When to Fine-Tune vs. Prompt Engineer [3 Case Studies]

Lessons from real-world case studies of giants like GitHub, Bloomberg, and Harvey AI.

Professionals have asked me this question many times. In today’s newsletter, we'll break down exactly when to choose each path, backed by real-world case studies from giants like GitHub, Bloomberg, and Harvey AI.

In today’s edition:

AI Deep Dive— Fine-Tune vs. Prompt Engineer [With Case Studies]

Build Together— Here’s How I Can Help You

AI Engineer Headquarters - Join the Next Live Cohort starting 3rd September 2025. Reply to this email for early bird access.

[AI Deep Dive]

When to Fine-Tune vs. Prompt Engineer [With Case Studies]

It's the million-dollar question.

The wrong choice can lead to wasted months, budget overruns, and AI products that fail to deliver.

The right choice unlocks performance, saves money, and gets you to market faster.

We'll break down exactly when to choose each path, backed by real-world case studies from giants like GitHub, Bloomberg, and Harvey AI.

Let's dive in.

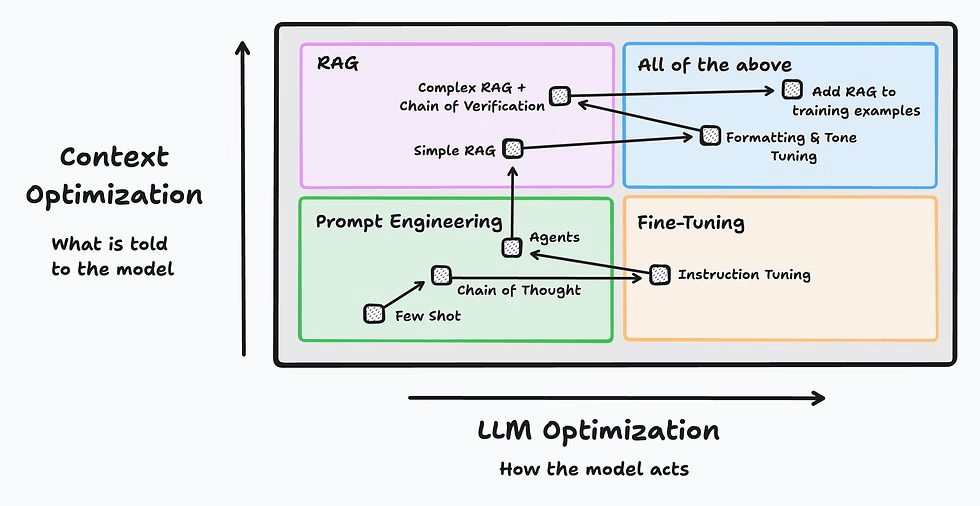

The Core Difference in 10 Seconds

Think of it like this:

Prompt Engineering is like giving a brilliant, generalist consultant (the LLM) a highly detailed and well-structured briefing. You're not changing the consultant, just the instructions you give them.

Fine-Tuning is like sending that same consultant to law school or medical school. You are permanently modifying their internal knowledge to make them a domain specialist.

This distinction is key.

One guides behavior; the other rewrites knowledge.
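To make the contrast concrete, here is a minimal sketch in Python. The function names are hypothetical, and the `messages` record layout is an assumption: it mirrors the chat-style JSONL format that several hosted fine-tuning services accept, so check your provider's documentation before relying on it.

```python
import json


def build_prompt(instructions: str, examples: list[tuple[str, str]], query: str) -> str:
    """Prompt engineering: the model stays the same; only the briefing changes."""
    shots = "\n\n".join(f"Input: {q}\nOutput: {a}" for q, a in examples)
    return f"{instructions}\n\n{shots}\n\nInput: {query}\nOutput:"


def to_training_record(query: str, ideal_answer: str) -> str:
    """Fine-tuning: each example becomes one line of a training file
    that is later used to update the model's weights."""
    record = {
        "messages": [
            {"role": "user", "content": query},
            {"role": "assistant", "content": ideal_answer},
        ]
    }
    return json.dumps(record)


# The briefing travels with every request...
print(build_prompt("Classify the sentiment.", [("great product", "positive")], "awful support"))
# ...while the training record is consumed once, at training time.
print(to_training_record("awful support", "negative"))
```

With prompting, the examples are paid for on every call. With fine-tuning, they are absorbed during training and no longer need to be sent.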

4 Questions [Decision Framework]

Before diving into case studies, ask your team these four questions.

Your answers will point you in the right direction.

1) Do we have the data?

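As a rule of thumb, plan on at least 5,000 high-quality, labeled examples before fine-tuning for a demanding task. Narrow tasks can sometimes work with fewer, but data quality matters more than raw count.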

If you don't have this, the decision is made for you: start with prompt engineering.

2) How stable is our use case?

Are you building a tool for a single, consistent task (e.g., analyzing SEC filings)? Or do you need flexibility for constantly changing user needs (e.g., a general marketing copy generator)?

stable - consider fine-tuning

dynamic - lean toward prompt engineering

3) What's our accuracy threshold?

Can we tolerate an 80% success rate, or do we need 95%+ precision for a safety-critical or legally sensitive application?

good enough is good enough - prompt engineering

mission-critical accuracy - fine-tuning is necessary

4) What's our timeline & budget?

Do we need a solution now with minimal upfront cost? Or are we making a long-term investment for a core product?

fast & cheap (upfront) - prompt engineering

strategic & long-term - fine-tuning
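If it helps to see the four questions as a checklist, here is a rough sketch in Python. The `Project` fields, the majority-vote rule, and the constant names are my own assumptions for illustration; the 5,000-example and ~85% figures are the ones used in this newsletter. Treat the output as a conversation starter, not a verdict.

```python
from dataclasses import dataclass

MIN_EXAMPLES = 5_000       # data threshold from question 1
PROMPTING_CEILING = 0.85   # rough accuracy prompting often reaches (see recommendation 3)


@dataclass
class Project:
    labeled_examples: int        # question 1: do we have the data?
    stable_use_case: bool        # question 2: single, consistent task?
    required_accuracy: float     # question 3: e.g. 0.95 for 95%
    long_term_investment: bool   # question 4: strategic rather than fast and cheap?


def recommend(p: Project) -> str:
    # Without enough data, the decision is already made.
    if p.labeled_examples < MIN_EXAMPLES:
        return "prompt engineering"
    # Assumption: the remaining three questions get one vote each.
    votes = sum([
        p.stable_use_case,
        p.required_accuracy > PROMPTING_CEILING,
        p.long_term_investment,
    ])
    return "fine-tuning" if votes >= 2 else "prompt engineering"


print(recommend(Project(20_000, True, 0.97, True)))   # fine-tuning
print(recommend(Project(1_200, True, 0.97, True)))    # prompt engineering
```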

[Case Study #1] GitHub Copilot’s Prompt Engineering at Scale

You might assume GitHub Copilot is just one giant, fine-tuned model.

You'd be partially wrong.

A huge part of its magic is sophisticated prompt engineering.

Challenge

Provide relevant code suggestions in real-time, across millions of different repositories and programming languages.

Fine-tuning a separate model for every project is impractical.

Solution

Copilot doesn't just see the line you're typing.

Its prompt architecture pulls in a massive amount of context:

content of your current file.

code from neighboring tabs you have open.

relevant file paths and repository metadata.

Result

A highly flexible system that adapts to your immediate context without needing to be retrained on your specific codebase.
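Here is a minimal sketch of what context assembly like this could look like. To be clear, this is not Copilot's actual implementation: the function name, the comment-style headers, and the character budget are all assumptions for illustration. A production system would count tokens rather than characters and rank snippets by relevance.

```python
def build_completion_prompt(
    file_path: str,
    current_file: str,
    neighbor_files: dict[str, str],
    max_chars: int = 6000,
) -> str:
    """Assemble one prompt from the three context sources, within a size budget."""
    header = f"# Path: {file_path}\n"
    # Keep the tail of the current file: the text nearest the cursor matters most.
    keep = max(max_chars - len(header), 0)
    current = current_file[-keep:] if keep else ""
    budget = max_chars - len(header) - len(current)

    snippets = []
    for path, text in neighbor_files.items():
        block = f"# Snippet from {path}:\n{text}\n"
        if len(block) <= budget:  # skip anything that would overflow the budget
            snippets.append(block)
            budget -= len(block)

    # The current file goes last so the model continues from the cursor.
    return header + "".join(snippets) + current


prompt = build_completion_prompt(
    "src/app.py",
    "def main():\n    ",
    {"src/util.py": "def helper():\n    return 42"},
)
print(prompt)
```

Nothing here touches the model's weights. Switching repositories just means different inputs to the same function.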

Key Takeaway from Copilot

For tasks requiring broad, dynamic context, advanced prompt engineering (often combined with RAG) is more effective than fine-tuning.

[Case Study #2] When Fine-Tuning is Non-Negotiable - Harvey AI

In the legal world, "close enough" can lead to malpractice.

This is where fine-tuning shines.

Challenge

Provide legal professionals with accurate, citable answers based on complex case law.

Generalist models like GPT-4 often "hallucinate" case names or misinterpret legal nuance.

Solution

Harvey AI partnered with OpenAI to create a custom model trained on roughly 10 billion tokens of legal data, chiefly U.S. case law.

This wasn't just about teaching the model new facts; it was about teaching it how to reason like a lawyer.

Result

In head-to-head tests, lawyers preferred the fine-tuned Harvey model over GPT-4 responses 97% of the time.

It produced more complete answers with correct citations.

Key Takeaway from Harvey AI

For domains with a unique vocabulary, structure, and reasoning process (like law, medicine, or finance), fine-tuning is essential to achieve expert-level performance and sharply reduce critical errors.

[Case Study #3] BloombergGPT - The Best of Both Worlds

What if you need both deep domain expertise and flexibility?

You build a hybrid system (my personal favorite).

Challenge

Create a financial research platform that understands deep financial concepts but can also answer questions about current market events.

Solution

A layered approach.

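1) Foundation Layer - domain-specific training

Bloomberg created BloombergGPT, a 50-billion-parameter model trained from scratch on a corpus that included 363 billion tokens of financial data alongside general-purpose text. Strictly speaking, that is domain-specific pretraining rather than fine-tuning, but it serves the same purpose in this stack: the domain knowledge lives in the model's weights.

This gave it a deep core understanding of finance.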

2) Adaptation Layer - prompting

User queries are passed through prompt templates that frame the question for the specialized model and format the output.

3) Context Layer - RAG

For questions about real-time data, the system retrieves current market information and adds it to the prompt.

Result

A system that outperforms general models on financial tasks while remaining flexible and up-to-date.
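The adaptation and context layers can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Bloomberg's code: the template text, the word-overlap retriever, and the `model` callable (a stand-in for whatever specialized model you call) are all hypothetical.

```python
from typing import Callable

# Adaptation layer: a prompt template that frames the question and the output.
TEMPLATE = (
    "You are a financial research assistant.\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer concisely and cite the context you used."
)


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Context layer (toy version): rank documents by word overlap with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda d: len(q_words & set(d.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def answer(question: str, documents: list[str], model: Callable[[str], str]) -> str:
    """Retrieve fresh context, fill the template, then call the specialized model."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents))
    prompt = TEMPLATE.format(context=context, question=question)
    return model(prompt)


# Usage with a placeholder model that just reports the prompt size.
docs = ["ACME shares rose 4% today after earnings", "ACME earnings beat estimates"]
print(answer("What happened to ACME shares?", docs, lambda p: f"(model saw {len(p)} chars)"))
```

A real context layer would use embeddings or a search index instead of word overlap, but the shape stays the same: retrieval feeds the template, and the template feeds the specialized model.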

Key Takeaway

Production-grade AI is rarely a single-method solution.

The most robust systems layer fine-tuned models with dynamic prompting and real-time data retrieval.

When Does Fine-Tuning Pay Off?

This is a critical consideration for any leader.

Prompt Engineering

low upfront cost, but you pay for every token, forever

costs scale linearly with usage

Fine-Tuning

high upfront cost (from ~$3k to $20k+ for training)

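the cost per query can be significantly lower, because a fine-tuned model usually needs a much shorter prompt (fewer instructions and examples per call)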

Break-Even Point

For applications with high volume (50,000+ queries/month), the initial investment in fine-tuning typically pays for itself in 12-18 months.

After that, it becomes a more cost-effective option.
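You can run this break-even math for your own numbers with a few lines of Python. The function name and the dollar figures in the usage example are hypothetical; plug in your actual training cost and per-query costs.

```python
import math


def break_even_months(
    upfront_cost: float,
    queries_per_month: int,
    cost_per_query_prompting: float,
    cost_per_query_finetuned: float,
) -> float:
    """Months until the fine-tuning investment is recovered by per-query savings."""
    monthly_saving = queries_per_month * (cost_per_query_prompting - cost_per_query_finetuned)
    if monthly_saving <= 0:
        return math.inf  # fine-tuning never pays off if each query isn't cheaper
    return upfront_cost / monthly_saving


# Hypothetical figures: $10k training cost, 50,000 queries/month,
# $0.020 per query with long prompts vs. $0.006 per query after fine-tuning.
months = break_even_months(10_000, 50_000, 0.020, 0.006)
print(f"{months:.1f} months")  # 14.3 months
```

Notice how sensitive the result is to volume: halve the queries per month and the payback period doubles.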

My Actionable Recommendations

So, what should you do?

1) Start with Prompt Engineering - Always

it’s the fastest way to build a prototype, validate your use case, and learn about your problem space

use it with RAG to get the most out of your existing models.

2) Log Everything

keep a detailed log of all prompts and the model's responses

this log is the raw material for your future fine-tuning dataset
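A minimal logging sketch, assuming a local JSONL file is good enough to start with. The function names and the metadata fields (`model`, `rating`) are my own placeholders; in production you would likely write to a database and scrub sensitive data first.

```python
import json
import time
from pathlib import Path


def log_interaction(log_path: str, prompt: str, response: str, **metadata) -> None:
    """Append one prompt/response pair as a single JSON line."""
    record = {"ts": time.time(), "prompt": prompt, "response": response, **metadata}
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_interactions(log_path: str) -> list[dict]:
    """Read the log back, e.g. to review and label examples for a training set."""
    with Path(log_path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


log_interaction("interactions.jsonl", "Summarize this filing...", "The company reported...",
                model="my-model", rating=1)
print(len(load_interactions("interactions.jsonl")))
```

One line per interaction keeps the file append-only and easy to filter later, for example keeping only responses that users rated highly.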

3) Define Your 85% Threshold

prompt engineering can often get you to ~85% accuracy

decide if that's good enough

If your application demands higher performance, reliability, or domain-specific reasoning, it's time to invest in fine-tuning.
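To know which side of the threshold you are on, measure it. Below is a bare-bones evaluation sketch; the names are hypothetical, `model` stands in for your prompted model, and exact-match scoring is a simplifying assumption that only suits short, unambiguous answers.

```python
from typing import Callable


def accuracy(model: Callable[[str], str], labeled: list[tuple[str, str]]) -> float:
    """Share of labeled examples the model answers correctly (case-insensitive exact match)."""
    if not labeled:
        raise ValueError("need at least one labeled example")
    correct = sum(
        model(question).strip().lower() == expected.strip().lower()
        for question, expected in labeled
    )
    return correct / len(labeled)


def needs_fine_tuning(measured: float, required: float) -> bool:
    """True when prompt engineering alone falls short of the required accuracy."""
    return measured < required


# Usage with a placeholder model that always answers "positive".
test_set = [("great product", "positive"), ("awful support", "negative")]
acc = accuracy(lambda q: "positive", test_set)
print(acc, needs_fine_tuning(acc, required=0.95))  # 0.5 True
```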

4) Hybrid Future

Don't think of it as an "either/or" choice.

Think "prompting now, fine-tuning later, and a hybrid system in production."

That’s it for the AI Deep Dive.

The best AI architects don't choose a single tool; they build a toolbox.

Until next time, keep building.

AI Deep Dive

Today’s deep dive topic is part of my series “AI Deep Dive.”

In this series, I am inviting you to submit a real-world problem you are struggling to solve with AI.

Today’s question was “When to Fine-Tune vs. Prompt Engineer,” asked by Rishabh Rathore [LinkedIn], System Engineer @TCS.

Reply to this email with your question.

Want to work together? Here’s How I Can Help You

AI Engineering & Consulting (B2B) at Dextar—[Request a Brainstorm]

Are you a leader? Join [The Elite]

Become an AI Engineer in 2025—[AI Engineer HQ]

AI Training for Enterprise Team—[MasterDexter]

Get in front of 5000+ AI leaders & professionals—[Sponsor this Newsletter]

I use BeeHiiv to send this newsletter.

PS: What do you want to learn next?
